I just wrote a Django application that imports content as blog posts, images included. The import can be triggered from the console (through a manage.py command). However, once the posts and their related images show up in the admin area, ready to be edited or published, the newly imported images don't appear properly: they are listed as folders, with no thumbnails.

Although there are some reports of issues when combining S3 with Mezzanine and filebrowser, those all seem to be resolved at the moment (great work, guys!)

Note that uploading images via the admin interface still works nicely. Images are recognized and thumbnails are rendered as usual.

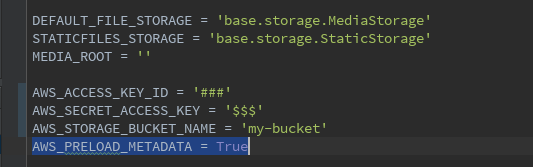

After some time tracing through the code of filebrowser, django.core.files.storage, s3boto, ... I found that the cause is AWS_PRELOAD_METADATA, a setting of django-storages-redux. When that setting is True, the current storage object keeps an in-memory dict called _entries that holds the metadata of every node in the bucket. It is loaded once at the start of the Django instance and updated accordingly after every S3 action.

The problem lies in the fact that the running site and the import command are separate instances. After a successful import, the metadata is updated in the storage of the command instance, while in the main instance the _entries property stays unchanged. Hence, when browsed, all new files are treated as folders (by s3boto) because their names are not found in the storage metadata.
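To make the mechanism concrete, here is a toy model of it in plain Python. It is only a sketch and not the s3boto code: `Bucket`, `PreloadingStorage`, `save` and `is_file` are names I made up for illustration, and only `_entries` and `entries` mirror the attributes mentioned above.

```python
class Bucket:
    """Stands in for the S3 bucket, which every process shares."""

    def __init__(self, keys=()):
        self.keys = set(keys)


class PreloadingStorage:
    """Hypothetical storage that caches the bucket listing in memory."""

    def __init__(self, bucket):
        self.bucket = bucket
        self._entries = None  # filled on first access, then reused

    @property
    def entries(self):
        if self._entries is None:
            # One listing of the bucket, kept for the life of this object.
            self._entries = {key: True for key in self.bucket.keys}
        return self._entries

    def save(self, name):
        self.bucket.keys.add(name)
        self.entries[name] = True  # only THIS object's cache is updated

    def is_file(self, name):
        # A name missing from the cache is not treated as a file.
        return name in self.entries


bucket = Bucket(["old.png"])
site = PreloadingStorage(bucket)      # the running site
command = PreloadingStorage(bucket)   # the manage.py import command

site.is_file("old.png")               # the site takes its snapshot here
command.save("new.png")               # the import writes to the bucket
stale = site.is_file("new.png")       # False: the site's snapshot is old
site._entries = None                  # drop the snapshot
fresh = site.is_file("new.png")       # True: the getter listed the bucket again
```

The last two lines are the whole idea behind the fix: nothing is wrong in the bucket, only the site's cached snapshot has to be thrown away.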

Solution:

- Create a new view: here I made it POST-only, with the @csrf_exempt decorator. It sets _entries to None and calls the entries getter again to re-collect the latest bucket node metadata.

- Call the view at the end of the import process so that thumbnails get generated for all new images.